Responsible AI in Enterprise Applications: A Practitioner's View

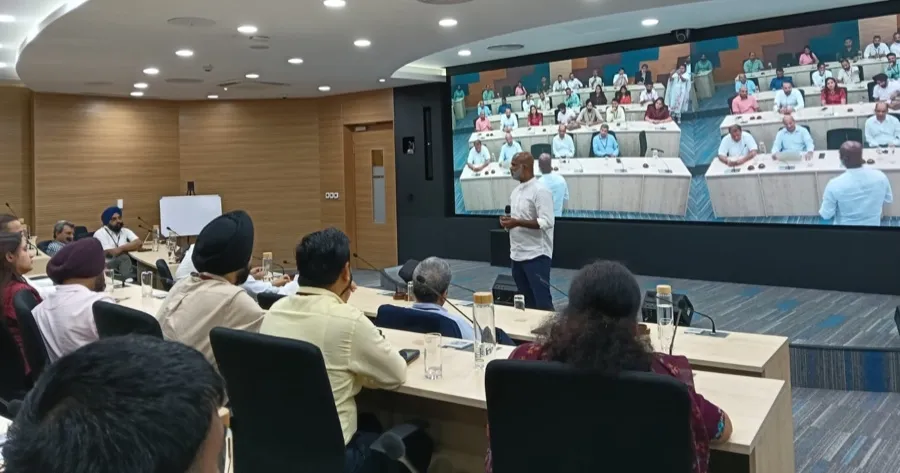

I gave a talk on this topic at the local chapter of the NASSCOM CXO Breakfast in Chandigarh. This post is an edited version of that talk.

You’ve heard the quote: "In theory, there's no difference between theory and practice. But in practice, there is."

Nowhere is that more true than in Responsible AI.

When you Google Responsible AI, you’ll get a clean list of ideals — fairness, transparency, explainability, safety, privacy, non-discrimination, robustness. Great aspirations. But the minute you try to apply them in a real enterprise setting, they collide with business priorities, data limitations, and product pressure.

Let me give you an analogy. If I ask a corporate group, “Should we bribe?”, everyone says no. It's unethical, it harms society. But if I ask any decent-sized group of Indian adults, “Have you ever paid a bribe?”, the answer changes. Because reality is messy. On these shaky foundations, we have built successful careers and businesses.

Same with Responsible AI. We’re building on models from providers like OpenAI, Anthropic (Claude), or Perplexity. These models are trained on data we don’t fully understand. Lawsuits are pending, including ones brought by The New York Times and Getty Images. Even Perplexity’s CEO, Aravind Srinivas, has said they rely on third-party data providers, so we don’t know the provenance or fairness of the data. And yet, we still have to build.

The best we can do is build responsibly at the application layer, even if the foundation is shaky.

# Two Kinds of Enterprise AI

I divide AI use in enterprises into two categories:

- Internal-facing applications – employee productivity, SDLC, copilots.

- External-facing applications – chatbots, sales enablement, customer service.

Each has different risks. Each needs its own flavor of governance.

# NIST AI RMF

We use the NIST AI Risk Management Framework (RMF) to guide both. NIST (National Institute of Standards and Technology) is a U.S. standards body that has long published frameworks for cybersecurity and risk management. We’ve used their cybersecurity framework before, so when it came to AI, adopting their AI RMF felt like a natural step. The RMF provides a structured, repeatable way to identify and mitigate AI risks, and more importantly, to build a culture of responsible AI use.

Here’s how the AI RMF breaks down:

# Govern

Purpose: Establishes policies, processes, and organizational structures to foster a culture of AI risk management, ensuring accountability and alignment with ethical and legal standards.

Key Actions:

- Define clear policies, standards, and risk tolerance levels

- Promote documentation and accountability across AI actors (e.g., developers, users, evaluators)

- Engage stakeholders (legal, IT, compliance, etc.) to integrate risk management into organizational culture

# Map

Purpose: Identifies and contextualizes AI risks by mapping them to specific systems, use cases, and stakeholders to understand potential impacts and pitfalls.

Key Actions:

- Identify ethical, regulatory, or societal risks (e.g., bias, privacy violations)

- Assess how AI systems align with organizational goals and societal values

- Document system functionality and potential failure points

# Measure

Purpose: Assesses AI risks using qualitative, quantitative, or mixed methods to evaluate system performance, trustworthiness, and impact.

Key Actions:

- Use tools to measure risks like bias, inaccuracy, or security vulnerabilities

- Track system behavior over time and monitor for unintended consequences

- Prioritize risks based on their likelihood and impact
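
One simple way to do that last step is a likelihood-times-impact score. The RMF doesn't prescribe a formula, so treat this as a sketch: the 1-to-5 scales, the `Risk` class, and the sample risks are all assumptions for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Risk:
    name: str
    likelihood: int  # 1 (rare) to 5 (almost certain); the scale is an assumption
    impact: int      # 1 (negligible) to 5 (severe); the scale is an assumption

    @property
    def score(self) -> int:
        # Classic risk-matrix score: likelihood multiplied by impact.
        return self.likelihood * self.impact


def prioritize(risks):
    """Return risks sorted from highest to lowest score."""
    return sorted(risks, key=lambda r: r.score, reverse=True)


# Made-up sample risks, purely for illustration.
risks = [
    Risk("biased output", likelihood=3, impact=4),
    Risk("inaccurate answer", likelihood=4, impact=2),
    Risk("prompt injection", likelihood=2, impact=5),
]
ranked = prioritize(risks)
for r in ranked:
    print(f"{r.score:>2}  {r.name}")
```

A multiplicative score is crude, but it gives legal, IT, and product one shared list to argue over.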

# Manage

Purpose: Implements strategies to mitigate identified risks, monitor systems, and respond to incidents, ensuring continuous improvement.

Key Actions:

- Apply technical and procedural controls (e.g., adjusting algorithms, enhancing data privacy)

- Develop incident response plans for AI-related issues (e.g., data breaches, ethical concerns)

- Continuously monitor and update systems as risks evolve

Let’s see how this plays out in practice.

# Internal Use

We’ve rolled out tools like Cursor for internal developer productivity. Here’s how we apply the NIST AI RMF:

- Map where AI is used in the SDLC: code generation, PR suggestions, test automation.

- Measure how much AI-generated code is accepted without review, and which repos are affected.

- Manage with mandatory peer review and secure linting.

- Govern with access policies, API key rotation, and audit logs.

It’s lighter-touch governance, but you still need clarity on usage boundaries and responsibilities.
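
To make the Measure step concrete, here's a rough sketch of the "accepted without review" metric. It assumes you can export merged changes with two flags, `ai_assisted` and `human_reviewed`. Both are hypothetical fields; how you derive them (PR labels, commit trailers, tool telemetry) depends on your setup.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class MergedChange:
    repo: str
    ai_assisted: bool     # hypothetical flag, e.g. taken from a PR label
    human_reviewed: bool  # True if at least one peer approved before merge


def unreviewed_ai_rate(changes):
    """Per repo: share of AI-assisted merged changes that had no human review."""
    total = defaultdict(int)
    unreviewed = defaultdict(int)
    for change in changes:
        if not change.ai_assisted:
            continue  # only AI-assisted changes count toward this metric
        total[change.repo] += 1
        if not change.human_reviewed:
            unreviewed[change.repo] += 1
    return {repo: unreviewed[repo] / total[repo] for repo in total}


# Made-up sample data, purely for illustration.
changes = [
    MergedChange("payments", ai_assisted=True, human_reviewed=True),
    MergedChange("payments", ai_assisted=True, human_reviewed=False),
    MergedChange("web", ai_assisted=True, human_reviewed=True),
    MergedChange("docs", ai_assisted=False, human_reviewed=False),
]
rates = unreviewed_ai_rate(changes)
print(rates)
```

Repos with a non-zero rate are where the mandatory peer review policy is leaking.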

# External Use

When AI talks to customers, the risk gets real.

Let’s take an e-commerce chatbot. You apply the same RMF, but deeper:

- Map what users can ask: orders, refunds, pricing, complaints. Decide whether the bot answers only from your internal knowledge base or also fetches from the web. What should it say if the details are not in the underlying knowledge base?

- Measure what percentage of queries are answered from the knowledge base, how often it hallucinates, customer satisfaction, and tone accuracy.

- Manage responses with fallback logic: set confidence thresholds and route low-confidence answers to human support.

- Govern tone of voice, disclaimers, incident response ownership, and policy reviews across product, legal, and CX.

This isn’t optional. If you don't govern these things, you’re rolling the dice with your brand.
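
Here's a minimal sketch of the fallback logic from the Manage step, plus the knowledge-base metric from the Measure step. Everything named here is an assumption: the `BotAnswer` fields, the 0.75 threshold, and the policy that only knowledge-base answers go out unescalated. How you get a confidence score at all depends on your stack.

```python
from dataclasses import dataclass

CONFIDENCE_THRESHOLD = 0.75  # assumed value; tune it against real transcripts
FALLBACK_MESSAGE = "I'm not sure about that. Let me connect you with our support team."


@dataclass(frozen=True)
class BotAnswer:
    text: str
    confidence: float  # 0.0 to 1.0, however your stack estimates it
    from_kb: bool      # True if grounded in the internal knowledge base


@dataclass(frozen=True)
class Routed:
    reply: str
    escalate: bool  # True means hand the conversation to human support


def route(answer, threshold=CONFIDENCE_THRESHOLD):
    """Send the bot's answer only if it is KB-grounded and confident enough."""
    if answer.from_kb and answer.confidence >= threshold:
        return Routed(reply=answer.text, escalate=False)
    return Routed(reply=FALLBACK_MESSAGE, escalate=True)


def kb_answer_rate(answers):
    """Fraction of answers that were grounded in the knowledge base."""
    if not answers:
        return 0.0
    return sum(a.from_kb for a in answers) / len(answers)


# Made-up sample answers, purely for illustration.
answers = [
    BotAnswer("Your order ships Monday.", confidence=0.92, from_kb=True),
    BotAnswer("Refunds take 30 days.", confidence=0.40, from_kb=True),
    BotAnswer("Our competitor is cheaper.", confidence=0.95, from_kb=False),
    BotAnswer("Returns are free within 14 days.", confidence=0.81, from_kb=True),
]
routed = [route(a) for a in answers]
print(kb_answer_rate(answers), [r.escalate for r in routed])
```

The design choice worth debating with product and legal is the default: in this sketch, anything uncertain or ungrounded escalates rather than ships.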

# Use Case Matrix

Here’s how we frame typical enterprise AI use cases using NIST RMF:

| Use Case | Risk | Reasonable Approach (Using NIST RMF) |
|---|---|---|
| Dev productivity tools | Insecure code, leakage | Map AI touchpoints, manage code reviews, govern tool access |
| Chatbots (embedded AI) | Hallucination, offensive output | Measure accuracy, govern with fallback logic, manage escalation |
| Hiring AI | Bias, legal risk | Map sensitive variables, manage with anonymization, measure fairness |
| Sales Enablement | Misleading content | Govern brand voice, measure tone & facts, review by sales ops |
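
For the hiring row, "measure fairness" can start with something as basic as comparing selection rates across groups. The sketch below is one common starting point, not a complete fairness audit. The `(group, shortlisted)` input shape and the sample numbers are assumptions. A widely used rule of thumb in US employment practice (the "four-fifths rule") treats a ratio below 0.8 as a signal to investigate, not as a legal verdict.

```python
from collections import Counter


def selection_rates(candidates):
    """candidates: iterable of (group, shortlisted) pairs. Returns rate per group."""
    seen = Counter()
    selected = Counter()
    for group, shortlisted in candidates:
        seen[group] += 1
        if shortlisted:
            selected[group] += 1
    return {group: selected[group] / seen[group] for group in seen}


def impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no disparity
    return min(rates.values()) / highest


# Made-up sample: 10 candidates per group, 5 shortlisted in A and 3 in B.
candidates = (
    [("A", True)] * 5 + [("A", False)] * 5
    + [("B", True)] * 3 + [("B", False)] * 7
)
rates = selection_rates(candidates)
ratio = impact_ratio(rates)
print(rates, round(ratio, 2))
```

With this sample the ratio comes out around 0.6, which under the rule of thumb would be a prompt to dig into the screening model before anyone relies on it.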

# Responsible AI Is a Culture Shift

You’re not going to get it right in one go. Especially not with how fast AI is evolving. So treat Responsible AI like any change program:

- Create policies.

- Train repeatedly.

- Set up clear escalation paths.

- Keep a feedback loop between tech teams and leadership.

We may not control the models. But we do control how we use them.
